<TL; DR> I’ve built a TCPSyphon Server myself in Java and tried to circumvent some of the flaws of the original Version </TL; DR>

Maybe you’ve heard of Syphon. “Syphon is an open source Mac OS X technology that allows applications to share frames – full frame rate video or stills – with one another in realtime” (Link). It was originally intended to work only locally, not to be shared between different hosts on a network. Fortunately someone took care of this and built a set of applications to share “Syphon data” between multiple computers. Strictly speaking they don’t share the Syphon data itself: they render local Syphon data into single images (JPEG, for example), compress them and send them over the network. Anyways, this is one of the applications you don’t get around as a VJ. You just HAVE to have it: TCPSyphon.

The person behind the TCPSyphon-apps also built a client for the Raspberry Pi. The TCPSClient. Now things become really interesting.

TCPSClient for Raspberry Pi from techlife on Vimeo.

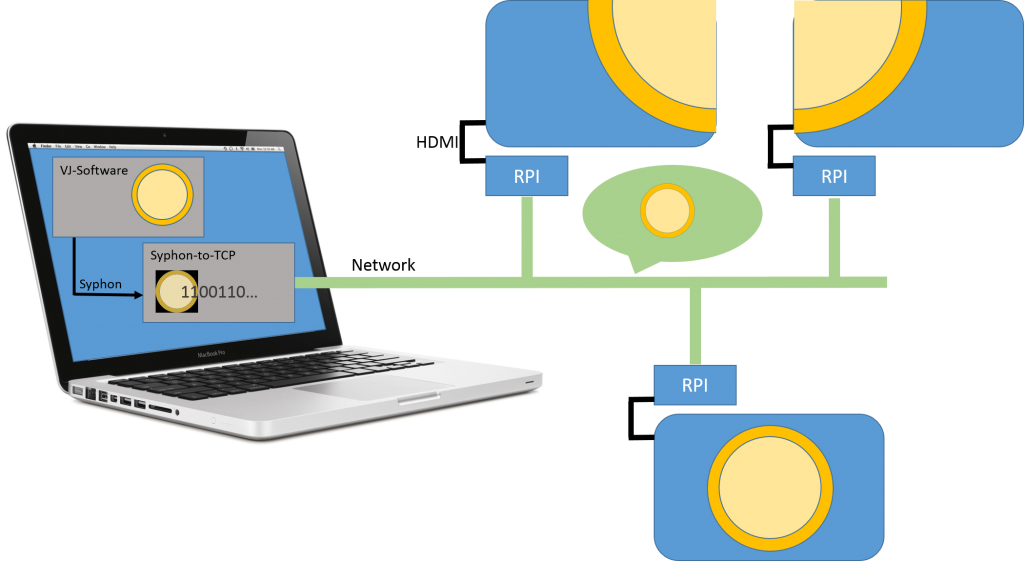

The Basic Operation behind this is simple: You have a computer running TCPSyphon (mac)/ TCPSpout (win) Server and a bunch of Raspberry Pis receiving the stream and displaying it. Everything is handled via ZeroConfig so you don’t even have to care about IP-addresses, hostnames etc as long as everything’s in the same network. In general, things just work.

While testing the second beta version I had a conversation with the developer about some ideas that made their way into the current version (beta 3 as of this writing): It is now possible to show only parts of the incoming Syphon stream. In theory you could build a videowall with nothing more than a few RPIs (..yeah well, … ‘in theory’. …)

[tube]https://www.youtube.com/watch?v=fvICJc0TAjc[/tube]

[tube]https://www.youtube.com/watch?v=vXSc5JcJYF8&feature=youtu.be[/tube]

The image gives an overview of what’s happening. Visual data are created and converted into network data on a computer. The datastream is sent to one or more RPIs running the TCPSClient, which converts the data back into images and displays them via projectors, screens, etc. A Raspberry Pi running the TCPSClient can display either the complete image or only parts of the incoming image. This. Is. Very. Cool. Very very cool.

The pros:

-Transmitting video has become a matter of Ethernet cabling. Ethernet cables are cheaper, easier to get and way more robust than HDMI.

-Displaying parts of a Video on multiple screens becomes cheap ‘n easy. In theory you don’t need a Triplehead2Go or Datapath X4 anymore

-Everything has an absolute minimum latency of just a few frames. Absolutely no comparison to other solutions.

The cons:

Though in theory Syphon doesn’t show limitations when being used locally, there surely ARE some limiting factors when using it on a network in conjunction with a Raspberry PI.

-The original TCPSyphon Server application doesn’t allow sending data to dedicated clients but sends everything to every device that gets to be known via ZeroConf. This means that even though an RPI might only show parts of the original Syphon stream it has to deal with the full stream coming in via network.

-Limited stream size (I): the TCPSClient uses the RPI’s GPU to display the images. As fate would have it, images must not be bigger than 2048*2048 pixels. If you send larger streams to the TCPSClient, it crashes.

-Limited stream size (II): The Ethernet interface of the RPI is connected via its internal USB bus. Officially it’s a 100 Mbit interface. In reality it’s a lot slower. I tried different configurations, but the datastream sent to an RPI should NOT exceed 720p at a compression rate of ~75%, otherwise the display will stutter and things will become really ugly.
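To put the bandwidth limit into numbers, here is a rough back-of-the-envelope sketch. It assumes the nominal 100 Mbit/s link rate and a frame rate of 25 fps (the frame rate is an assumption, it is not stated above), and it counts 4 bytes per pixel for an uncompressed RGBA frame. The class and method names are made up for illustration.

```java
final class FrameBudget {

    /** Bytes that fit into one frame interval on a link of the given speed. */
    static long bytesPerFrame(double linkMbit, double framesPerSecond) {
        return (long) (linkMbit * 1_000_000 / 8 / framesPerSecond);
    }

    /** Size of one uncompressed RGBA frame (4 bytes per pixel). */
    static long rawFrameBytes(int width, int height) {
        return (long) width * height * 4;
    }

    public static void main(String[] args) {
        long budget = bytesPerFrame(100, 25);   // nominal link speed, assumed 25 fps
        long raw = rawFrameBytes(1280, 720);    // one 720p frame
        System.out.println("Budget per frame: " + budget + " bytes");
        System.out.println("Raw 720p frame:   " + raw + " bytes");
        System.out.println("Required compression ratio: " + (double) raw / budget);
    }
}
```

Under these assumptions each frame may occupy at most about 500 KB on the wire, while a raw 720p RGBA frame is about 3.7 MB. The stream therefore has to shrink by a factor of more than 7, and by even more on the slower real-world link.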

In the beginning I had the idea of creating a Syphon stream containing the image data for four (or more) screens and sending it over the network. Every RPI running an instance of the TCPSClient would pick its portion and display it in FullHD, or at least in 720p. Taking the limiting factors into consideration it became obvious that this isn’t possible at all.

There are, in theory, ways to circumvent these flaws. One of the ideas I had involved a redesign of the TCPSyphon Server application. I sent some mails to the developer, but obviously I had a few too many (probably stupid) questions at the beginning and he soon completely refused to answer me on any topic. There is a GitHub repository containing an SDK and some basic examples, but the interesting bits (ZeroConf, compression, encapsulation into network packets) are all hidden within a compiled binary. So I had to take care of this myself.

After spending way too much time with pen, paper and Wireshark I finally figured out the requirements to build a custom TCPSyphon Server. I used Java for this but anything should do.

First up, you need to make yourself known. Meaning: Register the server and its service via ZeroConf. In this example I’m using JmDNS. The network port used is 59852, but this is just one of the possible ports that I encountered while analyzing network traffic between my computer and an RPI:

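    import javax.jmdns.JmDNS;
    import javax.jmdns.ServiceInfo;

    // Announce the server via ZeroConf so that TCPSClient instances can find it.
    public void registerBonjour() {
        try {
            JmDNS jmdns = JmDNS.create();
            // Arguments: service type, service name, port, weight, priority, text record
            ServiceInfo info = ServiceInfo.create("_tl_tcpvt.", "TCPSyphonXY", 59852, 0, 0, "");
            jmdns.registerService(info);
            System.out.println("Registered JmDNS, Application ready");
        } catch (Exception e) {
            System.out.println("registerBonjour():" + e.getMessage());
        }
    }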

Doing this will make the TCPSClient contact the server via the given port as soon as it (the client) is started. You can then send frames to the client. Every frame is displayed instantly. Every frame consists of a (JPEG-compressed) image with an extra 16-byte header. The bytes of the header have the following meaning:

    byHeader[0];  // Compression: JPEG: 0x00, JPEG_Glitch: 0x01, RAW: 0x02, PNG: 0x03, TURBOJPEG: 0x04
    byHeader[1];
    byHeader[2];
    byHeader[3];
    byHeader[4];  // width of image, LO byte
    byHeader[5];  // width of image, HI byte
    byHeader[6];
    byHeader[7];
    byHeader[8];  // height of image, LO byte
    byHeader[9];  // height of image, HI byte
    byHeader[10];
    byHeader[11];
    byHeader[12]; // size of compressed image + 16, HI'est byte
    byHeader[13]; // size of compressed image + 16
    byHeader[14]; // size of compressed image + 16
    byHeader[15]; // size of compressed image + 16, LO'est byte

The compressed size of the image is increased by 16. This is the size of the header that we have to add to the overall size. The author’s favourite compression is TurboJpeg so I will base my example on this. In order to use this compression (in Java) you need to get the necessary files here.

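    import java.util.Arrays;
    import org.libjpegturbo.turbojpeg.TJ;
    import org.libjpegturbo.turbojpeg.TJCompressor;

    /**
     * Compresses an image using libjpeg-turbo. The image will probably come from a BufferedImage that
     * has been converted into a byte array. pFromBufferedImage is the byte array containing the image data.
     * pWidth is the original image's width, pHeight describes the image's height.
     *
     * pCompression ranges from 0..100 with 100 being the highest quality.
     *
     * Note: written against the libjpeg-turbo 1.4 to 2.x Java wrapper (an assumption about the version used).
     */
    private byte[] compressImage(byte[] pFromBufferedImage, int pWidth, int pHeight, int pCompression) {
        byte[] byReturn = null;
        try {
            TJCompressor tjc = new TJCompressor();
            tjc.setJPEGQuality(pCompression);
            tjc.setSubsamp(TJ.SAMP_420);
            // setSourceImage() only registers the source pixels (pitch 0 = tightly packed rows)
            tjc.setSourceImage(pFromBufferedImage, 0, 0, pWidth, 0, pHeight, TJ.PF_RGBA);
            // compress() does the actual work; the returned buffer may be larger than the JPEG data
            byte[] byBuffer = tjc.compress(0);
            byReturn = Arrays.copyOf(byBuffer, tjc.getCompressedSize());
            // This value needs to be split into 4 bytes. Use it to fill the header's last 4 bytes.
            // Notice how the necessary 16 extra bytes are already taken into consideration
            int iCompressedSize = tjc.getCompressedSize() + 16;
            tjc.close();
        } catch (Exception e) {
            System.out.println("compressImage():" + e.getMessage());
        }
        return byReturn;
    }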
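The byte array handed to compressImage() has to hold the pixels in the order promised by TJ.PF_RGBA. A minimal sketch of such a conversion, assuming the frame is available as a java.awt.image.BufferedImage. The class and method names are hypothetical, and the original project may well obtain its pixels differently (for example straight from OpenGL):

```java
import java.awt.image.BufferedImage;

final class PixelConverter {

    /** Converts an image into a tightly packed byte array in R, G, B, A order. */
    static byte[] toRgba(BufferedImage image) {
        int width = image.getWidth();
        int height = image.getHeight();
        // getRGB() always returns packed ARGB ints, regardless of the image type
        int[] argb = image.getRGB(0, 0, width, height, null, 0, width);
        byte[] rgba = new byte[argb.length * 4];
        for (int i = 0; i < argb.length; i++) {
            int pixel = argb[i];
            rgba[4 * i]     = (byte) (pixel >> 16);   // red
            rgba[4 * i + 1] = (byte) (pixel >> 8);    // green
            rgba[4 * i + 2] = (byte) pixel;           // blue
            rgba[4 * i + 3] = (byte) (pixel >>> 24);  // alpha
        }
        return rgba;
    }
}
```

The per-pixel loop is simple rather than fast; for full frame rate video it would probably need to be replaced by something that avoids the copy.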

After having constructed the image we now need to fill the TCPSyphon-header with the correct data. We used libjpeg-turbo so the first byte will be 0x04. The image’s original width and height have to be converted into two-byte-values. Taking an image with the size of 800*600 pixels this will result in

    byHeader[0] = (byte)0x04; // Compression: TURBOJPEG: 0x04
    byHeader[1] = 0;
    byHeader[2] = 0;
    byHeader[3] = 0;
    byHeader[4] = (byte)0x20; // width of image, LO byte
    byHeader[5] = (byte)0x03; // width of image, HI byte
    byHeader[6] = 0;
    byHeader[7] = 0;
    byHeader[8] = (byte)0x58; // height of image, LO byte
    byHeader[9] = (byte)0x02; // height of image, HI byte
    ...
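The same header can be filled generically for any image size. The following is a minimal sketch based on the byte layout described above; the class and method names are hypothetical, and it assumes that all bytes without a documented meaning (1 to 3, 6, 7, 10 and 11) can simply stay zero.

```java
final class TcpSyphonHeader {

    static final int HEADER_SIZE = 16;
    static final byte TURBOJPEG = 0x04;

    /** Builds the 16-byte header for one frame. */
    static byte[] build(byte compression, int width, int height, int compressedSize) {
        byte[] header = new byte[HEADER_SIZE];   // all bytes start out as 0
        int total = compressedSize + HEADER_SIZE;

        header[0] = compression;
        // width and height: low byte first
        header[4] = (byte) (width & 0xFF);
        header[5] = (byte) ((width >> 8) & 0xFF);
        header[8] = (byte) (height & 0xFF);
        header[9] = (byte) ((height >> 8) & 0xFF);
        // size of compressed image + 16: highest byte first
        header[12] = (byte) ((total >>> 24) & 0xFF);
        header[13] = (byte) ((total >>> 16) & 0xFF);
        header[14] = (byte) ((total >>> 8) & 0xFF);
        header[15] = (byte) (total & 0xFF);
        return header;
    }
}
```

Calling `TcpSyphonHeader.build(TcpSyphonHeader.TURBOJPEG, 800, 600, jpeg.length)` reproduces the width and height bytes of the 800*600 example. Note the mixed byte order: width and height are stored low byte first, the size field high byte first.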

Please notice that the actual size of the compressed image (the header’s last 4 bytes) changes on a per-image basis because it depends on the content of the image. After having filled the TCPSyphon-header we need to create a new byte array containing the header + image and send this to the TCPSClient via the port we used to register our service (59852). This is just simple TCP-stuff. I won’t copy-paste this here.
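For readers who want a starting point anyway, here is a minimal sketch of that last step. It is not the code used in this project: the class and method names are hypothetical, and it assumes that the server simply accepts the client's TCP connection on the registered port and writes header and image as one contiguous block.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.ServerSocket;
import java.net.Socket;

final class FrameSender {

    /** Concatenates the 16-byte header and the first imageLength bytes of the image. */
    static byte[] assemble(byte[] header, byte[] image, int imageLength) {
        byte[] frame = new byte[header.length + imageLength];
        System.arraycopy(header, 0, frame, 0, header.length);
        System.arraycopy(image, 0, frame, header.length, imageLength);
        return frame;
    }

    /** Writes one complete frame to an already connected client. */
    static void send(OutputStream out, byte[] frame) throws IOException {
        out.write(frame);
        out.flush();
    }

    /** Waits for a single client on the given port and sends it one frame (assumed flow). */
    static void serveSingleClient(int port, byte[] frame) throws IOException {
        try (ServerSocket server = new ServerSocket(port);
             Socket client = server.accept()) {
            send(client.getOutputStream(), frame);
        }
    }
}
```

A real server would keep the connection open and call `send()` once per frame instead of closing the socket after the first one.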

Using these pieces of information and building a quick project around them, I was able to send video/image data from my Ubuntu machine to a Raspberry PI running the TCPSClient. The example shows an OpenGL-rendered rotating square (using LWJGL). The images were converted into TCPSyphon-compatible byte arrays and sent to two RPIs, each of them running the TCPSClient in basic configuration.

[tube]https://www.youtube.com/watch?v=-gvXbArtvYY&feature=youtu.be, 1280, 720[/tube]

After having the basics ready I went head-first into the JSyphon example project and added the functionality I had in mind. What came out is basically (an early beta of) a rebuild of the original TCPSyphon Server application. In contrast to the original I do not render the Syphon stream offscreen but in a visible full-size window. This didn’t turn out to be more CPU-consuming and it helped me find a few bugs etc. As an add-on I implemented my initial idea: I do not send the complete Syphon stream to every Raspberry but split the stream into 4 quads and send each quad to a dedicated Raspberry running a TCPSClient.

This means: I have a 4*720p Syphon Stream running locally (created with VDMX5) which is rendered into 4 individual parts (every part equals a 720p stream) which are sent to individual TCPSClient instances. This way every Raspberry receives its own 720p video stream without having to pick out parts and without having the need to upscale them.
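The splitting step can be illustrated with plain Java 2D. This is only a sketch of the idea: it assumes the frame is available as a BufferedImage and that the four parts are arranged as a 2x2 grid, and the class and method names are hypothetical. The actual project works on the rendered Syphon data rather than on BufferedImages.

```java
import java.awt.image.BufferedImage;

final class QuadSplitter {

    /**
     * Splits a frame into four equally sized quads, in the order
     * top-left, top-right, bottom-left, bottom-right.
     */
    static BufferedImage[] split(BufferedImage source) {
        int w = source.getWidth() / 2;
        int h = source.getHeight() / 2;
        // getSubimage() returns views that share the pixel data of the source,
        // so no pixels are copied here
        return new BufferedImage[] {
            source.getSubimage(0, 0, w, h),
            source.getSubimage(w, 0, w, h),
            source.getSubimage(0, h, w, h),
            source.getSubimage(w, h, w, h)
        };
    }
}
```

For a 2560*1440 source this yields four 1280*720 images, each of which can then be compressed and sent to its own client.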

[tube]https://www.youtube.com/watch?v=34WQ821KfP0, 1280, 720[/tube]

Well… what can I say. There is a ~slightly~ noticeable performance issue. It turned out that rendering the Syphon data and sending the TCPSyphon data to each client is not much of a problem, but using libjpeg-turbo to render 4 JPEG images from every new Syphon frame really brings my machine to its limits. Playing around with different compression values didn’t help either. (Of course I fine-tuned my code. I do not instantiate TJCompressor for every new image like in the example shown earlier….) The JPEG rendering is the de-facto showstopper at the moment. I will try to implement simple JPEG compression in a next version, but I don’t expect things to improve as drastically as would be necessary.
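What such a "simple JPEG compression" could look like with nothing but the JDK is sketched below. This is an assumption about a possible next step, not code from this project: it uses javax.imageio instead of libjpeg-turbo, the class and method names are hypothetical, and the result would be sent with compression byte 0x00 (JPEG) instead of 0x04. The input image must not have an alpha channel (for example TYPE_INT_RGB), because the JDK's JPEG writer rejects images with alpha.

```java
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import javax.imageio.IIOImage;
import javax.imageio.ImageIO;
import javax.imageio.ImageWriteParam;
import javax.imageio.ImageWriter;
import javax.imageio.stream.MemoryCacheImageOutputStream;

final class PlainJpeg {

    /** Compresses an image to JPEG; quality ranges from 0.0 (worst) to 1.0 (best). */
    static byte[] compress(BufferedImage image, float quality) throws IOException {
        ImageWriter writer = ImageIO.getImageWritersByFormatName("jpg").next();
        ImageWriteParam param = writer.getDefaultWriteParam();
        param.setCompressionMode(ImageWriteParam.MODE_EXPLICIT);
        param.setCompressionQuality(quality);

        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        // Closing the image stream flushes everything into the byte array
        try (MemoryCacheImageOutputStream out = new MemoryCacheImageOutputStream(bytes)) {
            writer.setOutput(out);
            writer.write(null, new IIOImage(image, null, null), param);
        } finally {
            writer.dispose();
        }
        return bytes.toByteArray();
    }
}
```

Whether this is any faster than libjpeg-turbo on a given machine would have to be measured; nothing here suggests that it is.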

However, I count this as a win. Even though this is not a high-performance-video-propagation-system (yet) it’s good to know the basics behind it. Now the doors are open to build other things around this. Maybe another digital signage system completely based on linux, maybe some fallback-system for VJ performances, etc etc. It should, for example, be relatively easy to build a TCPSyphon plugin for VLC now. This way everybody who has to deal with multiple video screens -and not only VJs- could benefit from this system. Ideas are coming.

It REALLY took me some time to figure all this out. It’s only one sentence, ‘…pen and paper and wireshark…’, but in reality a couple of weekends and some veery long evenings went into this. I am currently looking for a new job (*hint* *hint*) and should better have spent this time organizing my CV etc. But I didn’t. Things like these are more important to me. It’s good to have your priorities set =).

Just to make things clear. Some might say that I ‘hacked’ or ‘reverse engineered’ the original product which might be… not okay. I don’t think so. The TCPSClient in its current form is free to use, Syphon is Open Source, the Raspberry PI is (mostly) Open Source. This project, in my eyes, is nothing more than an extension to an existing product. All credit surely goes to Techlife.sg for providing the TCPSyphon apps and the TCPSClient. I strongly encourage you to visit their website, buy their products and make a donation via PayPal.